Link aggregation, also called trunking, is a technique that increases bandwidth by bundling multiple physical interfaces into one logical interface, named an Eth-Trunk. Besides increasing bandwidth, trunking lets us implement load balancing and provides higher reliability.

A few restrictions must be taken into consideration when creating an Eth-Trunk interface:

- Parameters of the physical interfaces (number, transmission rate, duplex mode and traffic-control mode) on both ends of the trunk link must be consistent.

- The data sequence must be preserved (frames belonging to the same data flow are transmitted over the same physical link).

Eth-Trunk interfaces configured on an S5700 switch support the following features:

- Layer 2 forwarding and Layer 3 forwarding (unicast and multicast).

- Hash algorithm-based load balancing.

- QoS on the trunk interface.

There are two link aggregation modes:

- Manual load balancing mode.

Usually used if one of the devices connected by the Eth-Trunk does not support LACP. LACP is disabled. All active member interfaces forward data and perform load balancing. If an active link of the link aggregation group fails, traffic is balanced among the remaining active links.

- Static LACP mode.

LACP is enabled. When physical interfaces are added to an Eth-Trunk interface, the devices at both ends negotiate aggregation parameters and determine active and inactive interfaces by sending LACPDUs to each other. Inactive interfaces are used for redundancy backup. When an active link fails, the backup link with the highest priority replaces the failed link and changes its status to active. The device in the link aggregation group with the higher LACP priority is the Actor, and the device with the lower LACP priority is the Partner. If both devices have the same LACP priority, the device with the smaller MAC address becomes the Actor.
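The Actor election described above can be sketched in a few lines of Python. This is only an illustration of the comparison rule, not the switch's implementation. The `LacpSystem` type and the function names are hypothetical; the priorities and system IDs are the ones used in the lab later in this post. A smaller priority value means a higher LACP priority.

```python
from typing import NamedTuple


class LacpSystem(NamedTuple):
    """Hypothetical container for the values compared during Actor election."""
    name: str
    system_priority: int  # smaller value = higher LACP priority
    mac: str              # system ID as displayed by the switch


def mac_to_int(mac: str) -> int:
    """Convert a MAC such as '4c1f-cce4-15b4' to an integer so it can be compared."""
    return int(mac.replace("-", "").replace(":", "").replace(".", ""), 16)


def elect_actor(a: LacpSystem, b: LacpSystem) -> LacpSystem:
    """Pick the Actor: lowest priority value first, smaller MAC as the tie-breaker."""
    return min((a, b), key=lambda s: (s.system_priority, mac_to_int(s.mac)))


sw1 = LacpSystem("labnario_1", 10, "4c1f-cce4-15b4")
sw2 = LacpSystem("labnario_2", 32768, "4c1f-ccb8-cad1")

print(elect_actor(sw1, sw2).name)
```

With both switches left at the default priority of 32768, the same rule would pick labnario_2, because its system ID is the smaller of the two.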

The process of setting up an Eth-Trunk link in static LACP mode:

- LACPDUs are exchanged between the devices at both ends.

- The Actor is determined based on the LACP system priority.

- Active interfaces are determined based on the Actor’s interface LACP priorities and interface IDs (load balancing is implemented across these active links).
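The last step can be illustrated with a small Python sketch, assuming the Actor simply sorts its member interfaces by port priority (smaller value first) and then by port number, and keeps as many as the configured maximum allows. The `MemberPort` type and `select_active` function are hypothetical names; the port priorities, port numbers and the limit of two active links are taken from the lab output later in this post.

```python
from typing import NamedTuple


class MemberPort(NamedTuple):
    """Hypothetical view of one Eth-Trunk member interface on the Actor."""
    name: str
    port_priority: int  # smaller value = higher LACP priority
    port_no: int
    up: bool = True


def select_active(ports, max_active):
    """Return the names of the ports the Actor would mark as active."""
    candidates = sorted(
        (p for p in ports if p.up),
        key=lambda p: (p.port_priority, p.port_no),
    )
    return [p.name for p in candidates[:max_active]]


default_ports = [
    MemberPort("GigabitEthernet0/0/1", 32768, 2),
    MemberPort("GigabitEthernet0/0/2", 32768, 3),
    MemberPort("GigabitEthernet0/0/3", 32768, 4),
]

print(select_active(default_ports, max_active=2))
```

With equal priorities the two lowest-numbered ports win. Lowering the priority value of a port moves it ahead of the others in the ordering.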

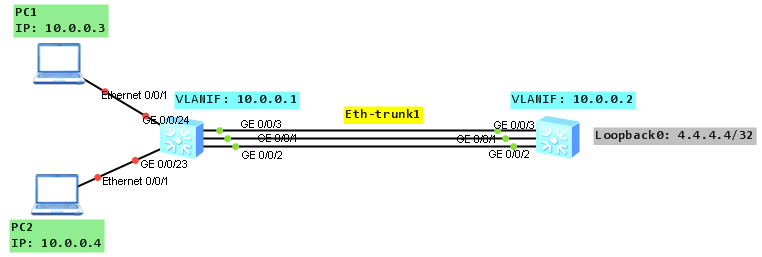

Let’s look at the topology:

Because configuring manual load balancing mode is simple, we will focus on static LACP mode.

Based on the topology, configure all necessary IP addresses:

labnario_1

    #
    interface Vlanif100
     ip address 10.0.0.1 255.255.255.0
    #
    interface GigabitEthernet0/0/23
     port link-type access
     port default vlan 100
    #
    interface GigabitEthernet0/0/24
     port link-type access
     port default vlan 100

labnario_2

    #
    interface Vlanif100
     ip address 10.0.0.2 255.255.255.0
    #
    interface LoopBack0
     ip address 4.4.4.4 255.255.255.255

Create the Eth-Trunk interface on both switches (labnario_1 as an example):

    [labnario_1]interface Eth-Trunk 1
    [labnario_1-Eth-Trunk1]bpdu enable
    [labnario_1-Eth-Trunk1]mode lacp-static

Add member interfaces to the Eth-Trunk on both switches:

    [labnario_1-Eth-Trunk1]trunkport GigabitEthernet 0/0/1 to 0/0/3
    Info: This operation may take a few seconds. Please wait for a moment....done.

You can also add members to the Eth-Trunk from the interface view.

Set the system priority on labnario_1 to 10 so that it becomes the Actor, and set the maximum number of active interfaces to 2:

    [labnario_1]lacp priority 10
    [labnario_1]interface Eth-Trunk 1
    [labnario_1-Eth-Trunk1]max active-linknumber 2

Verify the configuration on both switches:

    [labnario_1]dis eth-trunk 1
    Eth-Trunk1's state information is:
    Local:
    LAG ID: 1                   WorkingMode: STATIC
    Preempt Delay: Disabled     Hash arithmetic: According to SIP-XOR-DIP
    System Priority: 10         System ID: 4c1f-cce4-15b4
    Least Active-linknumber: 1  Max Active-linknumber: 2
    Operate status: up          Number Of Up Port In Trunk: 2
    --------------------------------------------------------------------------------
    ActorPortName          Status   PortType PortPri PortNo PortKey PortState Weight
    GigabitEthernet0/0/1   Selected 1000TG   32768   2      401     10111100  1
    GigabitEthernet0/0/2   Selected 1000TG   32768   3      401     10111100  1
    GigabitEthernet0/0/3   Unselect 1000TG   32768   4      401     10100000  1
    Partner:
    --------------------------------------------------------------------------------
    ActorPortName          SysPri   SystemID        PortPri PortNo PortKey PortState
    GigabitEthernet0/0/1   32768    4c1f-ccb8-cad1  32768   2      401     10111100
    GigabitEthernet0/0/2   32768    4c1f-ccb8-cad1  32768   3      401     10111100
    GigabitEthernet0/0/3   32768    4c1f-ccb8-cad1  32768   4      401     10110000

    [labnario_1]display trunkmembership eth-trunk 1
    Trunk ID: 1
    Used status: VALID
    TYPE: ethernet
    Working Mode : Static
    Number Of Ports in Trunk = 3
    Number Of Up Ports in Trunk = 2
    Operate status: up
    Interface GigabitEthernet0/0/1, valid, operate up, weight=1
    Interface GigabitEthernet0/0/2, valid, operate up, weight=1
    Interface GigabitEthernet0/0/3, valid, operate down, weight=1

    [labnario_2]dis eth-trunk 1
    Eth-Trunk1's state information is:
    Local:
    LAG ID: 1                   WorkingMode: STATIC
    Preempt Delay: Disabled     Hash arithmetic: According to SIP-XOR-DIP
    System Priority: 32768      System ID: 4c1f-ccb8-cad1
    Least Active-linknumber: 1  Max Active-linknumber: 8
    Operate status: up          Number Of Up Port In Trunk: 2
    --------------------------------------------------------------------------------
    ActorPortName          Status   PortType PortPri PortNo PortKey PortState Weight
    GigabitEthernet0/0/1   Selected 1000TG   32768   2      401     10111100  1
    GigabitEthernet0/0/2   Selected 1000TG   32768   3      401     10111100  1
    GigabitEthernet0/0/3   Unselect 1000TG   32768   4      401     10110000  1
    Partner:
    --------------------------------------------------------------------------------
    ActorPortName          SysPri   SystemID        PortPri PortNo PortKey PortState
    GigabitEthernet0/0/1   10       4c1f-cce4-15b4  32768   2      401     10111100
    GigabitEthernet0/0/2   10       4c1f-cce4-15b4  32768   3      401     10111100
    GigabitEthernet0/0/3   10       4c1f-cce4-15b4  32768   4      401     10100000

As you can see, 2 ports are selected, valid and in the UP state. The LACP system priority of labnario_1 is 10, the default port priority is 32768, and the default hash arithmetic is SIP-XOR-DIP (source and destination IP addresses; it can differ from switch to switch).
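The PortState column in the output above can be read more easily with a small decoder. The sketch below assumes that the 8-character string lists the standard LACP state flags from Activity to Expired, left to right. That ordering is an assumption, not something confirmed in this post, although it is consistent with the values shown (selected ports are collecting and distributing, unselected ports are not). The function names are hypothetical.

```python
# Standard LACP state flags, in the order assumed for the displayed string.
LACP_STATE_FLAGS = (
    "Activity",
    "Timeout",
    "Aggregation",
    "Synchronization",
    "Collecting",
    "Distributing",
    "Defaulted",
    "Expired",
)


def decode_port_state(bits: str) -> dict:
    """Map an 8-character PortState string such as '10111100' to named flags."""
    if len(bits) != 8 or set(bits) - {"0", "1"}:
        raise ValueError(f"expected 8 binary digits, got {bits!r}")
    return {flag: bit == "1" for flag, bit in zip(LACP_STATE_FLAGS, bits)}


def flags_set(bits: str) -> list:
    """Return only the names of the flags that are set."""
    return [flag for flag, on in decode_port_state(bits).items() if on]


for state in ("10111100", "10110000", "10100000"):
    print(state, flags_set(state))
```

Under this reading, a Selected port advertises Collecting and Distributing, while an Unselect port does not forward traffic on the aggregate.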

Now try to make GE0/0/1 and GE0/0/3 the selected ports and GE0/0/2 the unselected one by configuring the LACP priority on the interfaces. A smaller priority value indicates a higher LACP priority. Remember to enable the LACP preemption function on the Eth-Trunk. LACP preemption ensures that the interface with the highest LACP priority always functions as an active interface, even if it comes back to the UP state after a failure.

    [labnario_1]int gig 0/0/1
    [labnario_1-GigabitEthernet0/0/1]lacp priority 100
    [labnario_1-GigabitEthernet0/0/1]int gig 0/0/3
    [labnario_1-GigabitEthernet0/0/3]lacp priority 100
    [labnario_1]int Eth-Trunk 1
    [labnario_1-Eth-Trunk1]lacp preempt enable
    [labnario_1-Eth-Trunk1]lacp preempt delay 10

Let’s check what’s happened:

    [labnario_1]dis eth-trunk 1
    Eth-Trunk1's state information is:
    Local:
    LAG ID: 1                   WorkingMode: STATIC
    Preempt Delay Time: 10      Hash arithmetic: According to SIP-XOR-DIP
    System Priority: 10         System ID: 4c1f-cce4-15b4
    Least Active-linknumber: 1  Max Active-linknumber: 2
    Operate status: up          Number Of Up Port In Trunk: 2
    --------------------------------------------------------------------------------
    ActorPortName          Status   PortType PortPri PortNo PortKey PortState Weight
    GigabitEthernet0/0/1   Selected 1000TG   100     2      401     10111100  1
    GigabitEthernet0/0/2   Unselect 1000TG   32768   3      401     10100000  1
    GigabitEthernet0/0/3   Selected 1000TG   100     4      401     10111100  1
    Partner:
    --------------------------------------------------------------------------------
    ActorPortName          SysPri   SystemID        PortPri PortNo PortKey PortState
    GigabitEthernet0/0/1   32768    4c1f-ccb8-cad1  32768   2      401     10111100
    GigabitEthernet0/0/2   32768    4c1f-ccb8-cad1  32768   3      401     10110000
    GigabitEthernet0/0/3   32768    4c1f-ccb8-cad1  32768   4      401     10111100

By default, the LACP preemption delay is 30 seconds.
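To see why preemption matters here, the following Python sketch compares the two behaviours. It is a simplified model under stated assumptions, not the switch's algorithm: without preemption, ports that are already active keep their role as long as they are up; with preemption, the best ports by (priority, port number) always win. The preemption delay is not modelled, and the function name `choose_active` is hypothetical.

```python
def choose_active(ports, max_active, previously_active, preempt):
    """Return the active port names.

    ports: dict mapping the name of each port that is currently up to a
           (port_priority, port_no) tuple; smaller priority value wins.
    previously_active: set of port names that were active before the change.
    """
    order = sorted(ports, key=lambda name: ports[name])
    if preempt:
        # Highest-priority ports always take the active slots.
        return order[:max_active]
    # Without preemption, ports that are already active keep their slots.
    kept = [name for name in order if name in previously_active]
    spare = [name for name in order if name not in previously_active]
    return (kept + spare)[:max_active]


# Port priorities after the change made in this lab.
tuned = {
    "GigabitEthernet0/0/1": (100, 2),
    "GigabitEthernet0/0/2": (32768, 3),
    "GigabitEthernet0/0/3": (100, 4),
}
before = {"GigabitEthernet0/0/1", "GigabitEthernet0/0/2"}

print(choose_active(tuned, 2, before, preempt=False))
print(choose_active(tuned, 2, before, preempt=True))
```

In this model only the second call moves GE0/0/3 into the active set, which matches the lab result once `lacp preempt enable` is configured.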

Now we can check how the hashing algorithm works. To do this, we have to configure:

- The Eth-Trunk port link-type as trunk, allowing VLAN 100 to pass through the trunk.

- A static route on labnario_1, so that it can reach the loopback interface of labnario_2, and a default route on labnario_2.

    [labnario_1-Eth-Trunk1]port link-type trunk
    [labnario_1-Eth-Trunk1]port trunk allow-pass vlan 100
    [labnario_1]ip route-static 4.4.4.4 32 10.0.0.2
    [labnario_2]ip route-static 0.0.0.0 0 10.0.0.1

    [labnario_1]ping 4.4.4.4
    PING 4.4.4.4: 56 data bytes, press CTRL_C to break
    Reply from 4.4.4.4: bytes=56 Sequence=1 ttl=255 time=580 ms
    Reply from 4.4.4.4: bytes=56 Sequence=2 ttl=255 time=60 ms
    Reply from 4.4.4.4: bytes=56 Sequence=3 ttl=255 time=30 ms
    Reply from 4.4.4.4: bytes=56 Sequence=4 ttl=255 time=30 ms
    Reply from 4.4.4.4: bytes=56 Sequence=5 ttl=255 time=40 ms
    --- 4.4.4.4 ping statistics ---
    5 packet(s) transmitted
    5 packet(s) received
    0.00% packet loss
    round-trip min/avg/max = 30/148/580 ms

Now we can ping 4.4.4.4 from PC1 and check the statistics of the Eth-Trunk member interfaces:

    PC>ping 4.4.4.4 -t
    Ping 4.4.4.4: 32 data bytes, Press Ctrl_C to break
    From 4.4.4.4: bytes=32 seq=1 ttl=255 time=31 ms
    From 4.4.4.4: bytes=32 seq=2 ttl=255 time=47 ms
    From 4.4.4.4: bytes=32 seq=3 ttl=255 time=47 ms
    From 4.4.4.4: bytes=32 seq=4 ttl=255 time=31 ms
    From 4.4.4.4: bytes=32 seq=5 ttl=255 time=31 ms
    From 4.4.4.4: bytes=32 seq=6 ttl=255 time=31 ms
    From 4.4.4.4: bytes=32 seq=7 ttl=255 time=47 ms
    From 4.4.4.4: bytes=32 seq=8 ttl=255 time=31 ms
    From 4.4.4.4: bytes=32 seq=9 ttl=255 time=15 ms
    From 4.4.4.4: bytes=32 seq=10 ttl=255 time=31 ms
    From 4.4.4.4: bytes=32 seq=11 ttl=255 time=31 ms
    From 4.4.4.4: bytes=32 seq=12 ttl=255 time=31 ms
    From 4.4.4.4: bytes=32 seq=13 ttl=255 time=47 ms
    From 4.4.4.4: bytes=32 seq=14 ttl=255 time=47 ms
    From 4.4.4.4: bytes=32 seq=15 ttl=255 time=63 ms
    From 4.4.4.4: bytes=32 seq=16 ttl=255 time=47 ms
    From 4.4.4.4: bytes=32 seq=17 ttl=255 time=46 ms
    From 4.4.4.4: bytes=32 seq=18 ttl=255 time=47 ms
    From 4.4.4.4: bytes=32 seq=19 ttl=255 time=32 ms
    From 4.4.4.4: bytes=32 seq=20 ttl=255 time=31 ms
    From 4.4.4.4: bytes=32 seq=21 ttl=255 time=32 ms
    From 4.4.4.4: bytes=32 seq=22 ttl=255 time=31 ms
    From 4.4.4.4: bytes=32 seq=23 ttl=255 time=31 ms
    From 4.4.4.4: bytes=32 seq=24 ttl=255 time=47 ms
    From 4.4.4.4: bytes=32 seq=25 ttl=255 time=47 ms
    --- 4.4.4.4 ping statistics ---
    26 packet(s) transmitted
    26 packet(s) received
    0% packet loss
    round-trip min/avg/max = 15/38/63 ms

    <labnario_1>dis int gig 0/0/1 | inc Unicast
    Unicast: 26 packets, Multicast: 45 packets
    Unicast: 26 packets, Multicast: 3 packets
    <labnario_1>dis int gig 0/0/2 | inc Unicast
    Unicast: 0 packets, Multicast: 19 packets
    Unicast: 0 packets, Multicast: 19 packets
    <labnario_1>dis int gig 0/0/3 | inc Unicast
    Unicast: 0 packets, Multicast: 18 packets
    Unicast: 0 packets, Multicast: 19 packets

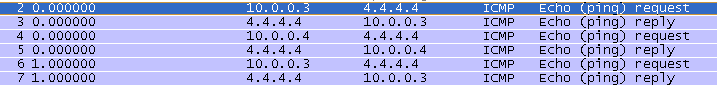

As you can see, all traffic goes through interface GE0/0/1. This is per-flow behaviour: frames belonging to the same data flow are transmitted over the same physical link.
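The per-flow behaviour follows from how a hash over the source and destination IP addresses selects a link. The Python sketch below is only an illustration of the idea: it XORs the two addresses and takes the result modulo the number of active links. The exact computation done by the switch is not shown in this post, so the function `pick_link` and the PC addresses 10.0.0.100 and 10.0.0.101 are hypothetical.

```python
import ipaddress


def pick_link(src_ip: str, dst_ip: str, active_links: list) -> str:
    """Choose a member link from the XOR of source and destination IPv4 addresses."""
    key = int(ipaddress.IPv4Address(src_ip)) ^ int(ipaddress.IPv4Address(dst_ip))
    return active_links[key % len(active_links)]


# Active links after the priority change in this lab.
links = ["GigabitEthernet0/0/1", "GigabitEthernet0/0/3"]

# Every packet of one flow (same source and destination) maps to the same link.
chosen = {pick_link("10.0.0.100", "4.4.4.4", links) for _ in range(26)}
print(chosen)

# A flow from a different (hypothetical) host may map to another link.
print(pick_link("10.0.0.101", "4.4.4.4", links))
```

Because the key depends only on the address pair, a single ping session cannot be spread across links. Balancing only becomes visible when many different flows cross the trunk.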

And the packets captured on GE0/0/1:

Labnario Huawei From Scratch